Beginner's Guide to Convolutional Neural Networks

The idea of convolutional neural networks is inspired by biological neural networks. Let's break down this neural network and try to understand it step by step.

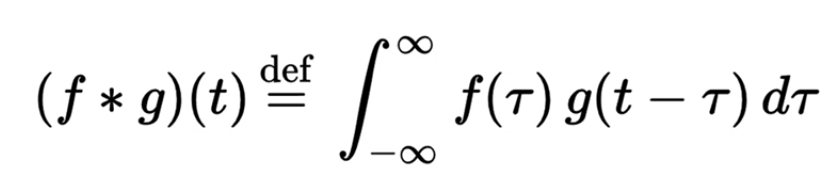

First, convolution is a mathematical operation that combines two signals, and it is one of the most important techniques in digital signal processing. In other words, it is an integral that measures how much two functions overlap as one is shifted across the other.

“The green curve shows the convolution of the blue and red curves as a function of t, the position indicated by the vertical green line. The gray region indicates the product as shown below as a function of t, so its area as a function of t is precisely the convolution.”

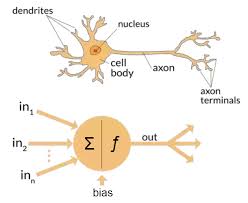
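To make the "overlap" idea concrete, here is a minimal sketch of the discrete version of convolution, where the integral becomes a sum over samples. The function name `convolve` and the sample values are illustrative assumptions, not taken from any particular library.

```python
def convolve(f, g):
    """Discrete convolution of two finite sequences (full-length output)."""
    result = [0.0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            # Each pair of samples contributes to the output at shift i + j.
            result[i + j] += fi * gj
    return result


signal = [1.0, 2.0, 3.0]   # hypothetical input signal
kernel = [0.0, 1.0, 0.5]   # hypothetical second signal (the "filter")
print(convolve(signal, kernel))  # [0.0, 1.0, 2.5, 4.0, 1.5]
```

Each output value is the sum of products of the samples that line up at that shift, which is the discrete counterpart of the gray area in the figure.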

As mentioned earlier, convolutional neural networks are inspired by biological neural networks, so it helps to start with the basic building blocks of a neural network.

BASIC TERMINOLOGY IN NEURAL NETWORKS

1.Neuron:

Let's assume that we get some information. What do we do when we receive it?

We process the information and generate an output. Similarly, when a neuron in a neural network receives an input, it processes that input and generates an output.

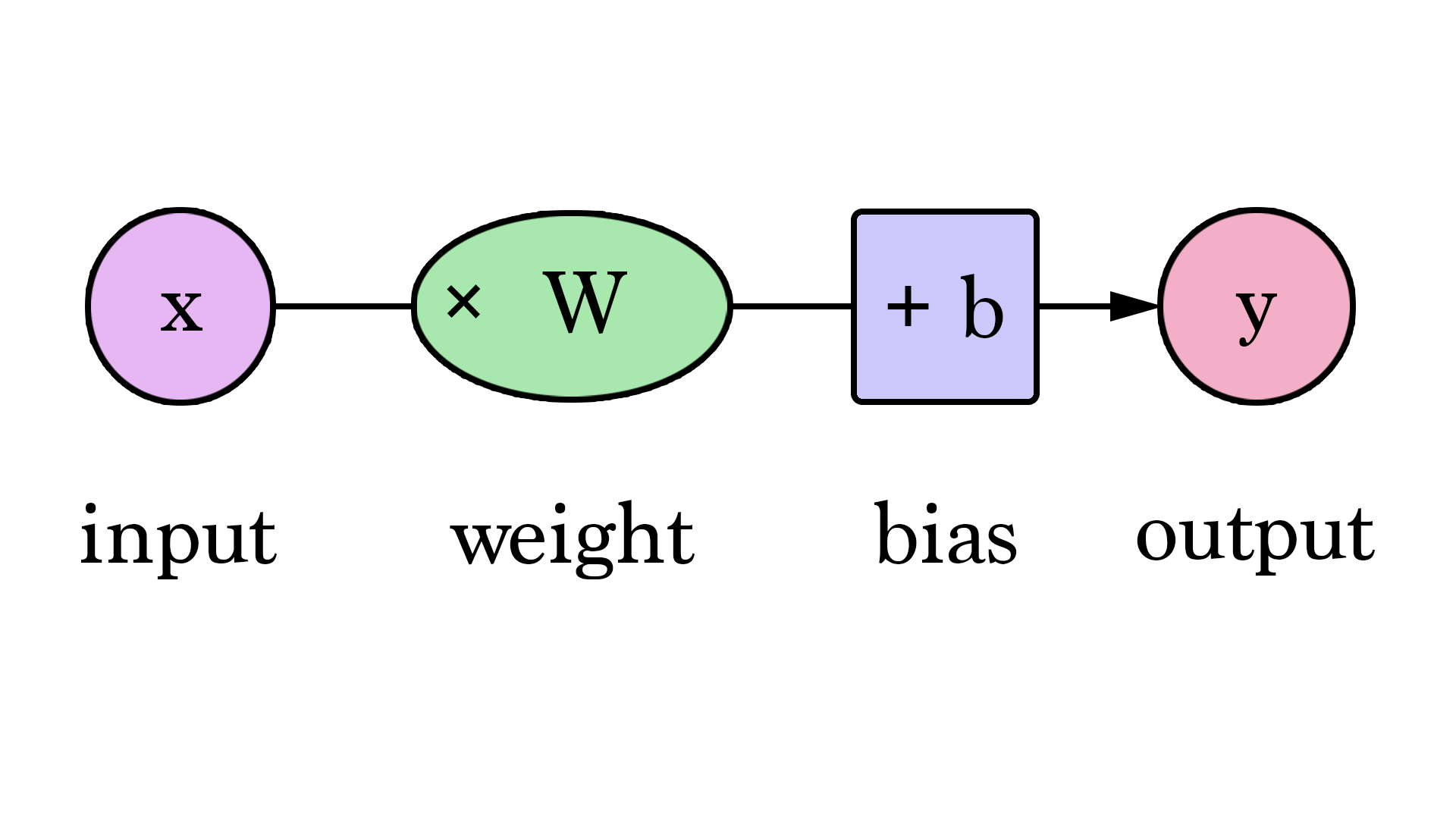

2.Weights:

Weights are parameters of a neural network that transform the input as it passes into the hidden layers of the network. As an input enters a node, it is multiplied by the weight value and the result is passed to the next layer.

3.Bias

Bias is an additional parameter in the Neural Network which is used to adjust the output along with the weighted sum of the inputs to the neuron.

Equation:

output = sum (weights * inputs) + bias
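The equation above can be sketched in a few lines of Python. The function name `neuron_output` and the numbers are made up for illustration only.

```python
def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias (no activation applied yet)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(w * x for w, x in zip(weights, inputs)) + bias


inputs = [1.0, 2.0, 3.0]     # hypothetical input values
weights = [0.5, -1.0, 2.0]   # hypothetical weights, one per input
bias = 0.5
print(neuron_output(inputs, weights, bias))  # 5.0
```

Here the weighted sum is 0.5 - 2.0 + 6.0 = 4.5, and adding the bias of 0.5 gives 5.0.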

4.Activation function

This function decides whether a neuron should be activated by transforming the weighted sum of its inputs plus the bias. The purpose of the activation function is to introduce non-linearity into the output of a neuron.

The most widely used activation functions are:

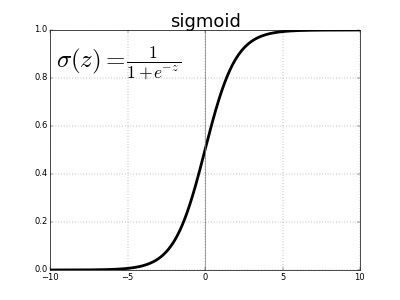

4.1 Sigmoid function:

A sigmoid function is a bounded, differentiable, real function that is defined for all real input values and has a non-negative derivative at each point and exactly one inflection point.

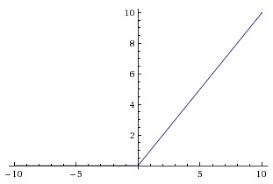
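As a concrete case, the sketch below uses the logistic function 1 / (1 + e^(-x)), which is the sigmoid most commonly used in neural networks (the definition above covers other S-shaped functions too). The function names are illustrative assumptions.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_derivative(x):
    """Derivative of the logistic sigmoid: s * (1 - s), never negative."""
    s = sigmoid(x)
    return s * (1.0 - s)


print(sigmoid(0.0))             # 0.5
print(sigmoid_derivative(0.0))  # 0.25
```

The output is always between 0 and 1, and the derivative peaks at x = 0, which is the single inflection point of the curve.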

4.2 ReLU (Rectified Linear Unit):

The rectifier is an activation function defined as the positive part of its argument.

f(x) = max(x,0)

where x is the input to a neuron. The output of the function is x when x > 0 and 0 when x <= 0.

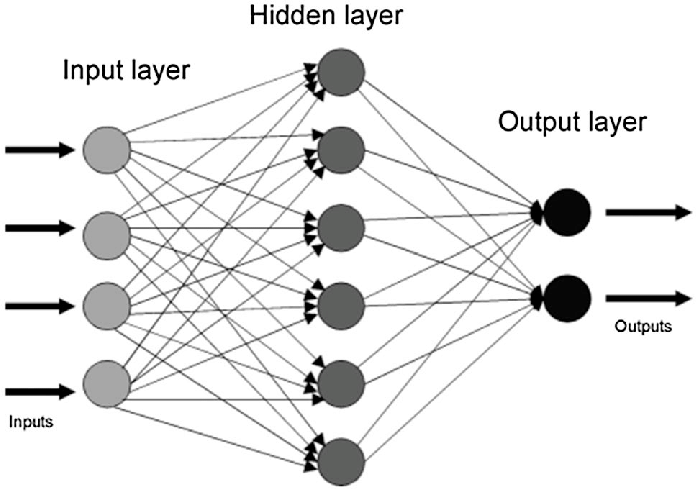
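A minimal sketch of the rectifier in Python, applied to a few made-up input values:

```python
def relu(x):
    """Rectified linear unit: the positive part of x."""
    return max(x, 0.0)


values = [-2.0, -0.5, 0.0, 1.5, 3.0]  # hypothetical neuron inputs
print([relu(v) for v in values])  # [0.0, 0.0, 0.0, 1.5, 3.0]
```

Negative inputs are clipped to zero, while positive inputs pass through unchanged.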

5.Elements of neural network

The three basic elements of a neural network are:

- Input Layer

- Hidden Layer

- Output Layer

6.Learning process in neural network

6.1 Forward propagation

Forward propagation is the calculation and storage of intermediate variables in order from the input layer to the output layer. In this step, information travels in the forward direction from the input layer through the hidden layers and produces the output at the output layer.
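The following is a rough sketch of a forward pass through a tiny network with one hidden layer. The layer sizes, the weight values, the choice of a sigmoid activation in every layer, and the helper names `dense_layer` and `forward` are all assumptions made for illustration.

```python
import math


def sigmoid(x):
    # Plain logistic function; fine for the small values used here.
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One layer: each row of `weights` holds the weights of one neuron."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Run the inputs through every layer, storing each intermediate result."""
    activations = [inputs]
    for weights, biases in layers:
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                     # output layer
]
activations = forward([1.0, 2.0], layers)
print(activations[1])   # hidden-layer outputs
print(activations[-1])  # network output
```

The list `activations` keeps the output of every layer, which is the "storage of intermediate variables" that the backward pass later relies on.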

6.2 Backward propagation

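This is short for "backward propagation of errors". The basic idea of backpropagation is to fine-tune the weights of a neural network based on the error rate obtained in the previous epoch or iteration: the error at the output is propagated backward through the layers, and each weight is adjusted in the direction that reduces it.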
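To show the weight-update idea in its simplest form, here is a sketch for a single linear neuron with no hidden layers, so the backward pass is just one application of the chain rule. The squared-error loss, the learning rate, the number of epochs, and the toy data are all assumptions chosen for illustration; a real network repeats the same step layer by layer.

```python
def train_neuron(samples, learning_rate=0.1, epochs=200):
    """Fit output = w * x + b by gradient descent on 0.5 * error**2."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x + b       # forward pass
            error = prediction - target  # how far off the output is
            # Backward pass: gradients of 0.5 * error**2 (chain rule).
            grad_w = error * x
            grad_b = error
            # Move each parameter a small step against its gradient.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from target = 2 * x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_neuron(samples)
print(w, b)  # approaches w = 2, b = 1
```

After each pass over the data the error shrinks, so the learned values move toward the weight and bias that generated the samples.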

7. Loss function

A loss function is used to optimize the parameter values in a neural network model. Loss functions map a set of parameter values for the network onto a scalar value that indicates how well those parameters accomplish the task the network is intended to do.
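As one common example, the sketch below uses mean squared error to map a pair of parameter values (w, b) onto a single number. The linear model, the function name `loss`, and the data are assumptions for illustration; other tasks use other loss functions.

```python
def loss(w, b, samples):
    """Mean squared error of the model output = w * x + b over the samples."""
    return sum((w * x + b - target) ** 2 for x, target in samples) / len(samples)


# Hypothetical data generated from target = 2 * x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
print(loss(2.0, 1.0, samples))  # 0.0, these parameters fit the data exactly
print(loss(0.0, 0.0, samples))  # larger value, these parameters fit poorly
```

A lower value means the parameters do a better job, which is what training tries to achieve by adjusting the weights and bias.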

References

https://www.analyticsvidhya.com/blog/2017/05/25-must-know-terms-concepts-for-beginners-in-deep-learning/

https://qph.fs.quoracdn.net/main-qimg-6b67bea3311c3429bfb34b6b1737fe0c

https://images.deepai.org/django-summernote/2019-06-03/e20ff932-4269-4bed-82fb-383b0f1ce96d.png

https://miro.medium.com/max/875/0*O2eWM0x2Jnb1CBWG

https://miro.medium.com/max/450/0*yPJfAA0d6TmYfKwg.gif

https://www.researchgate.net/profile/Murat_Kirisci/publication/332083969/figure/fig1/AS:745473979719680@1554746255709/Neural-network-model-layers-input-hidden-output-Source-Ramesh-et-al-35.png